Get live statistics and analysis of vLLM's profile on X / Twitter

A high-throughput and memory-efficient inference and serving engine for LLMs. Join slack.vllm.ai to discuss together with the community!

The Innovator

vLLM is a cutting-edge engine designed to revolutionize large language model (LLM) inference with remarkable speed and memory efficiency. This profile thrives on pushing the boundaries of AI technology, collaborating openly with the community to accelerate innovation. It’s the go-to hub for developers and enthusiasts eager to scale and streamline LLM deployment.

Top users who interacted with vLLM over the last 14 days

Daily posts on AI, Tech, Programming, Tools, Jobs, and Trends | 500k+ (LinkedIn, IG, X) | Collabs: abrojackhimanshu@gmail.com

Founder and CEO of Z360

We make AI models Dolphin and Samantha BTC 3ENBV6zdwyqieAXzZP2i3EjeZtVwEmAuo4 ko-fi.com/erichartford dphn.ai @dphnAI

Automation specialist

Founder of Winninghealth AI lab | Researcher in AI in healthcare, HIT, biomedical engineering, etc.

model personality | prev: sent a human to space | Oxford

modeling language at @allen_ai

Cofounder and Head of Post Training @NousResearch, prev @StabilityAI Github: github.com/teknium1 HuggingFace: huggingface.co/teknium

Every age, it seems, is tainted by the greed of men. Rubbish to one such as I, devoid of all worldly wants. — I work on HPC and making AI run faster.

NLP Scientist | AutoAWQ Creator | Open-Source Contributor

apaz.dev Making GPUs go brrr

You’re so deep in the code and optimization rabbit hole, you probably benchmark your coffee breaks and cache your lunch – proving even your downtime is more efficient than most people’s entire workday.

Supporting the RL training and inference of DeepSeek-R1, which was featured as a cover article in Nature, is a landmark achievement that shows vLLM’s real-world scientific impact and cutting-edge capabilities.

To drive forward the frontier of AI inference technology by creating tools that are not only powerful and efficient but also accessible to a broad community, enabling widespread advancement and adoption of large language models.

vLLM values open source collaboration, transparency, and the power of community-driven innovation. This profile believes that sharing advancements openly accelerates progress and that technology should be built for scalability and real-world impact. Efficiency and accessibility are key principles guiding its development philosophy.

Exceptional technical prowess in optimizing and delivering high-throughput, memory-efficient AI inference solutions. Strong community engagement through open-sourcing and responsive feature development fuels innovation and trust.

May sometimes lean heavily into technical depth and niche topics, potentially alienating non-expert followers or those seeking simpler entry points. The follower count was also unavailable (reported as undefined) for this analysis, so audience reach could not be assessed.

To grow the audience on X, vLLM should mix educational content with engaging storytelling — demystify complex features with visuals, quick demos, or relatable analogies. Collaborate more with influencers in AI and tech communities, and spotlight real-world use cases to widen appeal beyond just the hardcore developers.

Fun fact: vLLM’s engine powers fast OCR and multimodal inference projects like DeepSeek-OCR, which runs at roughly 2,500 tokens per second on an A100-40G GPU. Blazing fast!

Top tweets of vLLM

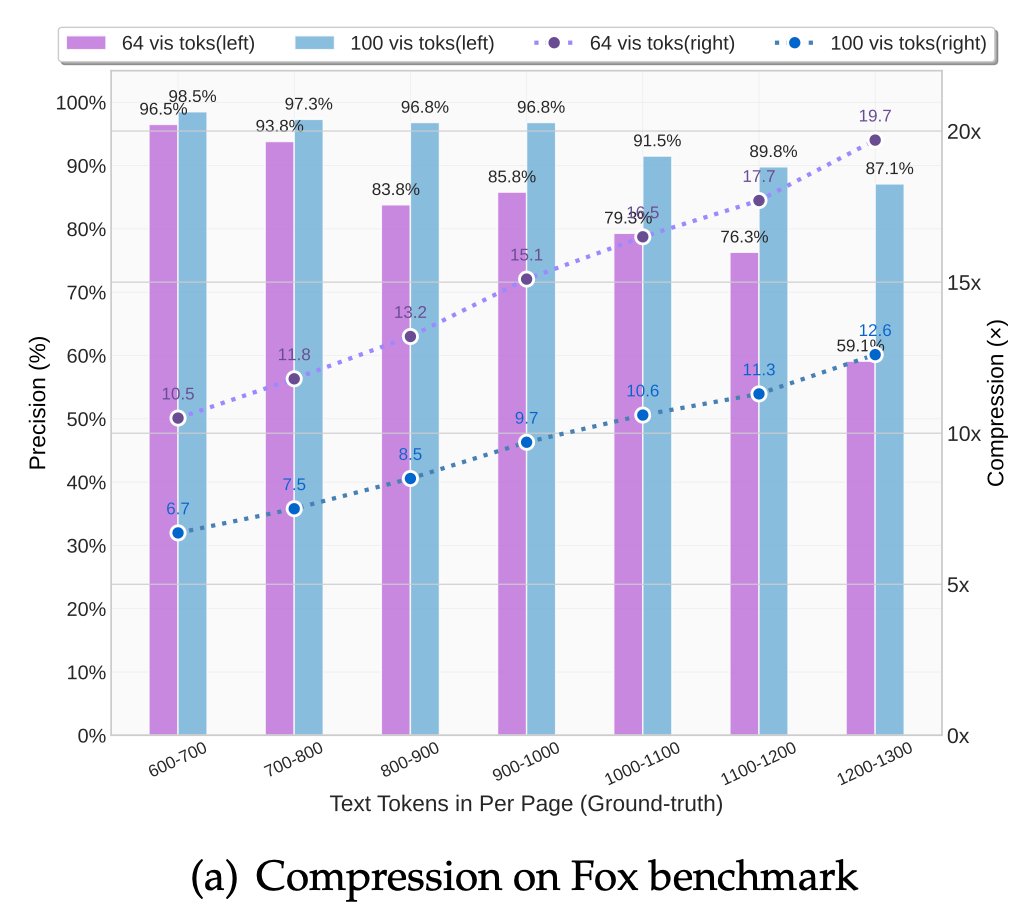

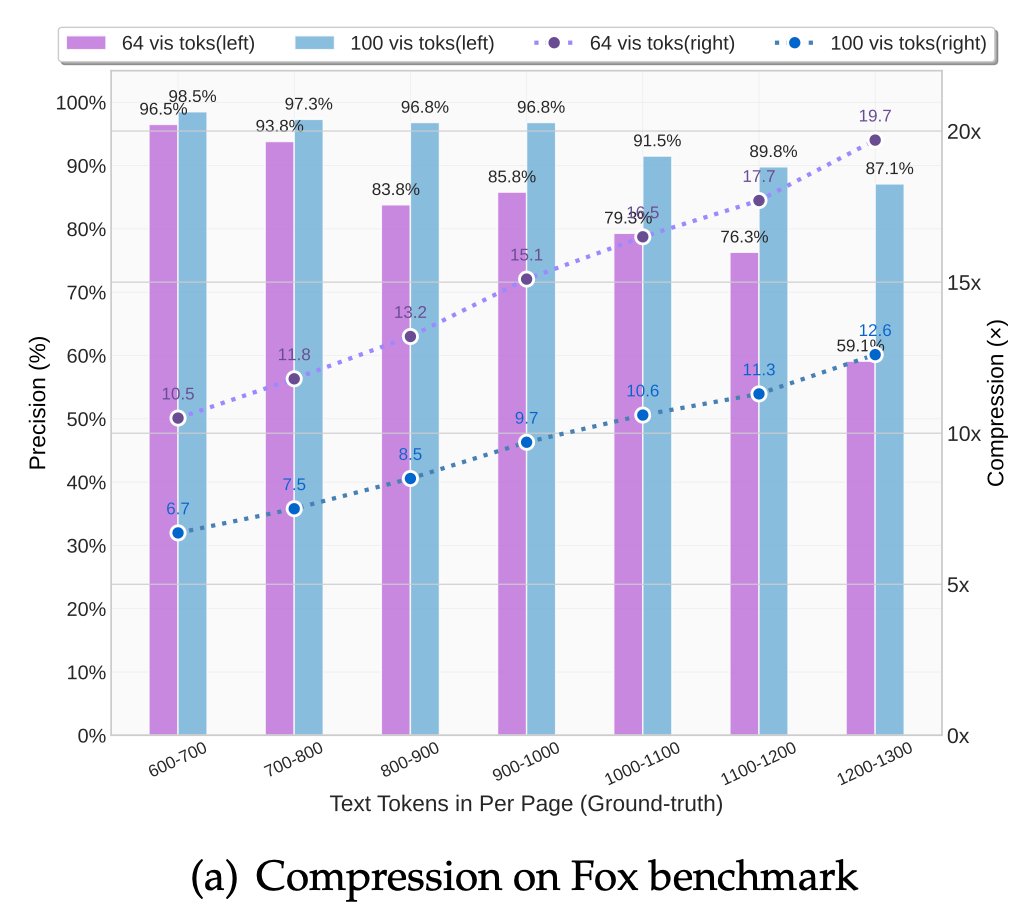

🚀 DeepSeek-OCR — the new frontier of OCR from @deepseek_ai , exploring optical context compression for LLMs, is running blazingly fast on vLLM ⚡ (~2500 tokens/s on A100-40G) — powered by vllm==0.8.5 for day-0 model support. 🧠 Compresses visual contexts up to 20× while keeping 97% OCR accuracy at <10×. 📄 Outperforms GOT-OCR2.0 & MinerU2.0 on OmniDocBench using fewer vision tokens. 🤝 The vLLM team is working with DeepSeek to bring official DeepSeek-OCR support into the next vLLM release — making multimodal inference even faster and easier to scale. 🔗 github.com/deepseek-ai/De… #vLLM #DeepSeek #OCR #LLM #VisionAI #DeepLearning
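The compression figures in this tweet can be read as a simple ratio. Below is a minimal Python sketch, assuming that "compression" means the number of text tokens a page would need divided by the number of vision tokens used to encode it; the helper names and the 800/100 token counts are hypothetical, and the buckets only mirror the numbers quoted in the tweet.

```python
def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """Text tokens represented per vision token (assumed definition)."""
    if vision_tokens <= 0:
        raise ValueError("vision_tokens must be positive")
    return text_tokens / vision_tokens


def regime(ratio: float) -> str:
    """Bucket a ratio against the figures quoted in the tweet."""
    if ratio < 10:
        return "under 10x: ~97% OCR accuracy reported"
    if ratio <= 20:
        return "10x to 20x: more aggressive compression"
    return "beyond the quoted 20x range"


# Hypothetical page: 800 text tokens rendered into 100 vision tokens.
r = compression_ratio(800, 100)
print(r, regime(r))  # 8.0 under 10x: ~97% OCR accuracy reported
```

Read this way, "up to 20×" means a page that would cost 2,000 text tokens is squeezed into 100 vision tokens, while the 97% accuracy figure applies to the milder sub-10× range.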

🚀 Amazing community project! vLLM CLI, a command-line tool for serving LLMs with vLLM:
✅ Interactive menu-driven UI & scripting-friendly CLI
✅ Local + HuggingFace Hub model management
✅ Config profiles for perf/memory tuning
✅ Real-time server & GPU monitoring
✅ Error logs & recovery
📦 Install in one line: pip install vllm-cli
GitHub: github.com/Chen-zexi/vllm…
👉 Would you like to see these features in vLLM itself? Try it out & share feedback!

Announcing the completely reimagined vLLM TPU! In collaboration with @Google, we've launched a new high-performance TPU backend unifying @PyTorch and JAX under a single lowering path for amazing performance and flexibility.
🚀 What's New?
- JAX + PyTorch: Run PyTorch models on TPUs with no code changes, now with native JAX support.
- Up to 5x Performance: Achieve nearly 2x-5x higher throughput compared to the first TPU prototype.
- Ragged Paged Attention v3: A more flexible and performant attention kernel for TPUs.
- SPMD Native: We've shifted to Single Program, Multi-Data (SPMD) as the default, a compiler-centric model native to TPUs for optimal execution.
Dive deep into the new architecture and see the performance benchmarks in our latest blog post! blog.vllm.ai/2025/10/16/vll… #vLLM #TPU #JAX #PyTorch #AI #OpenSource
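"Paged attention" refers to vLLM's paged KV cache: each sequence's keys and values are stored in fixed-size blocks, and a per-sequence block table maps logical positions to physical blocks. "Ragged" suggests a batch that mixes sequences of different lengths without padding them. The following is a minimal conceptual sketch of that bookkeeping, assuming a block size of 16 and a simple free-list allocator; the class names are hypothetical, and this is not the TPU kernel or vLLM's actual block manager.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 16  # tokens per KV-cache block (illustrative value)


class BlockAllocator:
    """Hands out fixed-size physical KV-cache blocks from a free list."""

    def __init__(self, num_blocks: int) -> None:
        # Reversed so that pop() returns block 0 first, then 1, 2, ...
        self.free = list(range(num_blocks - 1, -1, -1))

    def allocate(self) -> int:
        if not self.free:
            raise MemoryError("out of KV-cache blocks")
        return self.free.pop()


@dataclass
class Sequence:
    length: int = 0
    block_table: list[int] = field(default_factory=list)  # logical -> physical block

    def append_token(self, alloc: BlockAllocator) -> None:
        # A new physical block is needed only when the last one is full.
        if self.length % BLOCK_SIZE == 0:
            self.block_table.append(alloc.allocate())
        self.length += 1

    def slot(self, pos: int) -> tuple[int, int]:
        """(physical block, offset) that holds the KV entry of token `pos`."""
        return self.block_table[pos // BLOCK_SIZE], pos % BLOCK_SIZE


# A ragged batch: three sequences of different lengths share one pool, no padding.
alloc = BlockAllocator(num_blocks=8)
batch = [Sequence() for _ in range(3)]
for seq, n in zip(batch, [5, 20, 33]):
    for _ in range(n):
        seq.append_token(alloc)

print([s.block_table for s in batch])  # [[0], [1, 2], [3, 4, 5]]
print(batch[2].slot(32))               # (5, 0)
print(len(alloc.free))                 # 2
```

The three sequences use 6 of the 8 blocks. If every sequence were padded to the longest length (33 tokens), each would reserve ceil(33/16) = 3 blocks, 9 in total, which is more than this pool holds.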

🚀 New in vLLM: dots.ocr 🔥 A powerful multilingual OCR model from @xiaohongshu hi lab is now officially supported in vLLM!
📝 Single end-to-end parser for text, tables (HTML), formulas (LaTeX), and layouts (Markdown)
🌍 Supports 100 languages with robust performance on low-resource docs
⚡ Compact 1.7B VLM, but achieves SOTA results on OmniDocBench & dots.ocr-bench
✅ Free for commercial use
Deploy it in just two steps:
1. uv pip install vllm --extra-index-url https://wheels.vllm.ai/nightly
2. vllm serve rednote-hilab/dots.ocr --trust-remote-code
Try it today and bring fast, accurate OCR to your pipelines. Which models would you like to see next in vLLM? Drop a comment ⬇️
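Once `vllm serve` is running, the model can be queried through vLLM's OpenAI-compatible chat completions endpoint. Here is a minimal standard-library sketch, assuming the server listens on the default `http://localhost:8000` and that the image is sent inline as a base64 data URL; the prompt text and the `page.png` file name are hypothetical, and dots.ocr's recommended prompts should be taken from its own model card.

```python
import base64
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # assumed default address of `vllm serve`
MODEL = "rednote-hilab/dots.ocr"       # served model name defaults to the model ID


def build_ocr_request(image_bytes: bytes, prompt: str, mime: str = "image/png") -> dict:
    """Chat-completions payload with the image inlined as a base64 data URL."""
    b64 = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": MODEL,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{b64}"}},
                {"type": "text", "text": prompt},
            ],
        }],
        "temperature": 0.0,
    }


def send(payload: dict) -> dict:
    """POST the payload to the server and return the decoded JSON response."""
    req = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


# Usage, with the server running:
# with open("page.png", "rb") as f:  # hypothetical input file
#     reply = send(build_ocr_request(f.read(), "Parse this page into Markdown."))
# print(reply["choices"][0]["message"]["content"])
```

Keeping payload construction separate from the HTTP call makes the request easy to inspect or reuse with any OpenAI-compatible client.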

it’s tokenization again! 🤯 did you know tokenize(detokenize(token_ids)) ≠ token_ids? RL researchers from Agent Lightning coined the term Retokenization Drift: a subtle mismatch between what your model generated and what your trainer thinks it generated.
why? because most agents call LLMs via OpenAI-compatible APIs that only return strings, so when those strings get retokenized later, token splits may differ (HAV+ING vs H+AVING), tool-call JSON may be reformatted, or chat templates may vary. → unstable learning, off-policy updates, training chaos. 😬 (@karpathy has a great video explaining all details about tokenization 👉🏻 youtube.com/watch?v=zduSFx… )
together with the Agent Lightning team at Microsoft Research, we’ve fixed it: vLLM’s OpenAI-compatible endpoints can return token IDs directly. just add "return_token_ids": true to your /v1/chat/completions or /v1/completions request, and you’ll get both prompt_token_ids and token_ids along with normal text outputs. no more drift. no more mismatch. your agent RL now trains exactly on what it sampled.
read more from the blog 👇 👉 blog.vllm.ai/2025/10/22/age… #vLLM #AgentLightning #RL #LLMs #OpenAIAPI #ReinforcementLearning
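The drift described in this tweet can be reproduced with a toy tokenizer. The sketch below assumes a made-up five-piece vocabulary and a greedy longest-match tokenizer (real BPE tokenizers are different, but the effect is the same): the model samples H+AVING, the API returns the string "HAVING", and retokenizing that string yields HAV+ING. It then builds a request body with the `return_token_ids` flag named in the tweet; the model name is hypothetical, and the exact place of `prompt_token_ids` and `token_ids` in the response JSON should be checked against the vLLM docs.

```python
import json

# Hypothetical toy vocabulary, for illustration only.
VOCAB = {"H": 0, "AV": 1, "ING": 2, "HAV": 3, "AVING": 4}
ID_TO_PIECE = {i: s for s, i in VOCAB.items()}


def detokenize(ids: list[int]) -> str:
    return "".join(ID_TO_PIECE[i] for i in ids)


def tokenize(text: str) -> list[int]:
    """Greedy longest-match tokenizer over VOCAB."""
    ids, pos = [], 0
    while pos < len(text):
        for end in range(len(text), pos, -1):
            piece = text[pos:end]
            if piece in VOCAB:
                ids.append(VOCAB[piece])
                pos = end
                break
        else:
            raise ValueError(f"cannot tokenize {text[pos:]!r}")
    return ids


sampled = [VOCAB["H"], VOCAB["AVING"]]  # what the model actually generated: H+AVING
text = detokenize(sampled)              # "HAVING", the only thing a string API returns
retokenized = tokenize(text)            # the trainer's view: HAV+ING
print(sampled, retokenized)             # [0, 4] [3, 2]

# Asking vLLM for the sampled IDs directly avoids the round trip.
request_body = {
    "model": "my-model",  # hypothetical served model name
    "messages": [{"role": "user", "content": "Say HAVING"}],
    "return_token_ids": True,
}
print(json.dumps(request_body))
```

Same text, different token IDs: a trainer that retokenizes computes log-probabilities for a sequence the policy never sampled, which is why the updates become off-policy.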

🚀 vLLM x MinerU: Document Parsing at Lightning Speed! We’re excited to see MinerU fully powered by vLLM, bringing ultra-fast, accurate, and efficient document understanding to everyone.
⚡ Powered by vLLM’s high-throughput inference engine, MinerU 2.5 delivers:
- Instant parsing, no waiting
- Deeper understanding for complex docs
- Optimized cost: even consumer GPUs can fly
Experience the new speed of intelligence: 👉 github.com/opendatalab/Mi… #vLLM #MinerU #AI #LLM #DocumentParsing #AIresearch

Most engaged tweets of vLLM

The most engaged tweets are the same posts quoted in full above, in this order: the DeepSeek-OCR announcement, the new vLLM TPU backend, dots.ocr support, the retokenization-drift fix, and the vLLM CLI community project.

People with the Innovator archetype

Believe in God; he will take care of you

🆕 Searching for the next B2B use case of AI ✨ 10Y+ Product & Software Engineer | CEO at assetplan.co.uk

aka.ms/Build25_BRK165 Designing the new era of intelligent applications, currently as PM for @msftcopilot 365 extensibility. All opinions are mine.

CRYPTO ENTHUSIAST ➡️ HOSPITALITY MANAGER ➡️ PROTOCOL TESTER ➡️ CRYPTO IS FREEDOM

Staff @Kimi_Moonshot prev. co-maker of ModelizeAI & gemsouls "Personality goes a long way" @UCSanDiego

The first onchain equity layer. Building decentralized ownership for all. Use → earn → own. Powered by next gen DEX (BETA) on @Solana. Alerts: @GlydoAlerts 🤖

Founded isoHunt, WonderSwipe. AI shepherd at @boomtv @StarpowerAI. 70% engineering 30% product

💎 Crypto builder | $ULAB $BOB $RAYLS $SolvBTC $GLNT | DeFi strategist | HODL dreams, execute moves | #BlockchainLife

@LinkfinderOK to find links at the best price using AI | agenceonze.fr to delegate your SEO to a solid team of senior SEO experts

Building an AI content architect to automate media engagement & empower creators. Sharing insights on AI, community, & building in public.

building @bunjavascript. formerly: @stripe (twice) @thielfellowship. high school dropout. npm i -g bun
