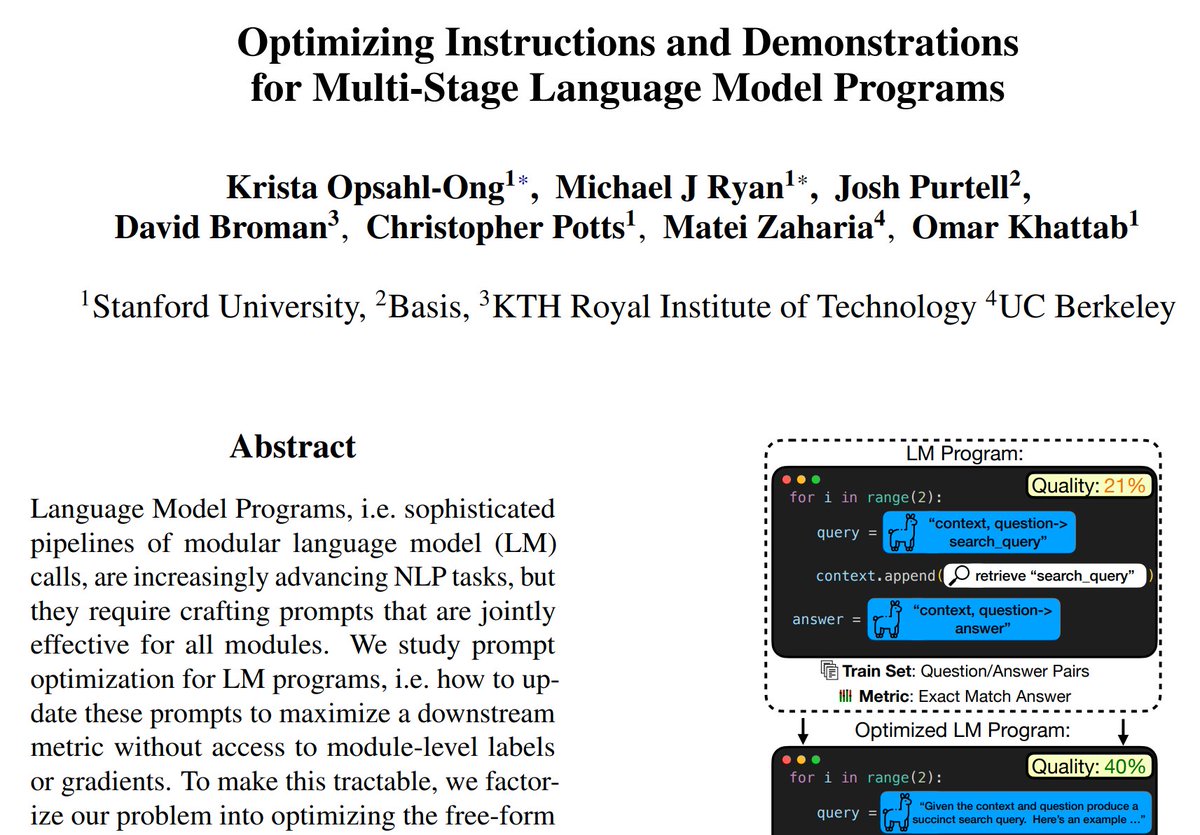

Get live statistics and analysis of Omar Khattab's profile on X / Twitter

Asst professor @MIT EECS & CSAIL (@nlp_mit). Author of ColBERT.ai and DSPy.ai (@DSPyOSS). Prev: CS PhD @StanfordNLP. Research @Databricks.

The Thought Leader

Omar Khattab is an assistant professor at MIT EECS & CSAIL, sharing deep insights into NLP, ML systems, and AI research. With a prolific tweet presence and broad academic influence, he consistently bridges cutting-edge research with practical knowledge for both students and fellow researchers. His Twitter is a dynamic mix of thoughtful commentary, research updates, and mentorship calls.

Sure, Omar tweets like he’s debugging a giant AI, but with 10,191 tweets, you’d think he’d have fixed that one typo from 2014 by now. At this rate, even his retweets get their own PhD thesis.

Successfully transitioning from Stanford NLP PhD to an MIT CSAIL assistant professorship, all while maintaining an influential and highly engaged online presence that shapes AI discourse.

To advance the frontier of AI and NLP research while cultivating the next generation of computer scientists through impactful teaching and open knowledge sharing.

Omar values rigorous research, transparency, and the democratization of AI knowledge. He believes impactful science is grounded in accessible tools, open-source collaboration, and clear communication that demystifies complex AI concepts.

His academic credibility combined with active and engaging communication creates a strong, trusted voice in the AI research community. He knows how to translate dense research topics into accessible ideas without losing nuance.

His very technical and research-heavy focus may limit engagement with broader, less specialized audiences and sometimes results in fewer casual or light-hearted interactions, which could restrict community growth outside academia.

To grow his audience on X, Omar could experiment with more approachable content like AI myths debunked, behind-the-scenes of research life, or quick tips for students. Engaging more in conversations and Q&A threads could also amplify his reach beyond academia.

Fun fact: Omar’s prolific tweet count (over 10,000) means he’s likely shared more AI insights on Twitter than some entire textbooks contain, making his feed a goldmine for AI enthusiasts and scholars alike!

Top tweets of Omar Khattab

Most engaged tweets of Omar Khattab

People with Thought Leader archetype

18. Developer. SaaS

Build World Scale Products | Play Infinite Games | Health: systema.health | @v0 ambassador

Former Microsoft Dev | Coding & Robotics Insight | Windows ESC | AI Enthusiast | Tech Tips | Vibe Coding | Content Creator | Premium+ User | Grok xAI Red Team

Naturally Curious | Building Suitable AI

A passionate writer. Just from my heart to you (see link). || COPYWRITER. With captivating, constructive and strategic copy to put your brand at the forefront.

Solo founder 🤹 | Waiting for my - OVERNIGHT SUCCESS ⏳| Built Digital desktop clock - thedigitalclock.com

Visiting Researcher @MIT_CSAIL. PhD student @NotreDame advised by @Meng_CS. Creator of Arbor RL library for @DSPyOSS

前端輕鬆聊是一個在溫哥華科技公司擔任資深前端工程師 Eric @sdusteric 所主持的頻道。想帶給聽眾前端的知識、一些你可能不知道的tips、工程師的職涯發展以及國外第一手前端技術的新聞。 想要跟我一起變成更好的工程師,記得follow喔!

Father of three, Creator of Ruby on Rails + Omarchy, Co-owner & CTO of 37signals, Shopify director, NYT best-selling author, and Le Mans 24h class-winner.

From idea → live product: sideframe.app 🎉 Solo founder sharing wins, fails & lessons. Prev: TextMuse AI

Product & Growth @ Softgen.ai. Helping builders turn ideas into apps with AI.

Explore Related Archetypes

If you enjoy the thought leader profiles, you might also like these personality types: