Get live statistics and analysis of Connor Shorten's profile on X / Twitter

AI and Databases @weaviate_io

The Innovator

Connor Shorten is a pioneering force in the AI and database space, making waves with cutting-edge research and software innovations like DSPy. His passion for structuring and optimizing AI workflows comes alive through insightful and educational content aimed at advancing large language model applications. Innovatively blending research with practical tools, Connor is transforming how AI systems are built and understood.

Connor’s Twitter feed looks like a doctoral thesis that got stuck in tweet mode�—perfect for those who want a PhD in AI just by scrolling. Maybe tone it down to one chatbot factoid at a time, and the rest of us mere mortals might keep up!

Connor’s biggest win is successfully launching DSPy, a novel AI programming model and compiler that offers unprecedented control and optimization of large language model workflows—making a tangible impact on the future of AI tooling.

Connor's life purpose centers on pushing the boundaries of AI technology, making complex systems more accessible and effective, and empowering developers with tools that unlock the full potential of large language models. He aims to catalyze the next wave of innovation in AI programming by bridging theoretical research with impactful software solutions.

He believes that clarity in problem definition and the power of iterative refinement are key to building reliable AI systems. Connor holds that collaboration, shared knowledge, and open research accelerate innovation. He values precision, structured thinking, and the careful orchestration of complex AI components to ensure consistent and effective outputs.

Connor excels at deep technical expertise combined with clear and thorough communication. His ability to break down complex AI concepts into engaging threads and tutorials is remarkable. He shows a knack for pioneering novel programming paradigms and pushing forward experimental research into practical tools.

Sometimes, his communication can be a bit dense or overly technical for casual followers, which might limit broader accessibility. His intense focus on innovation could occasionally lead to overcomplicating solutions where simpler approaches might suffice.

To grow his audience on X, Connor should consider weaving more bite-sized, simplified insights and use-cases that appeal to a wider audience beyond hardcore AI practitioners. Engaging visuals, quick tips, and interactive Q&A sessions could boost follower interaction and attract developers at various experience levels.

Fun fact: Connor doesn't just talk about programming models like DSPy—he builds compilers that optimize AI instruction chains, taking inspiration from frameworks like PyTorch to revolutionize LLM programming!

Top tweets of Connor Shorten

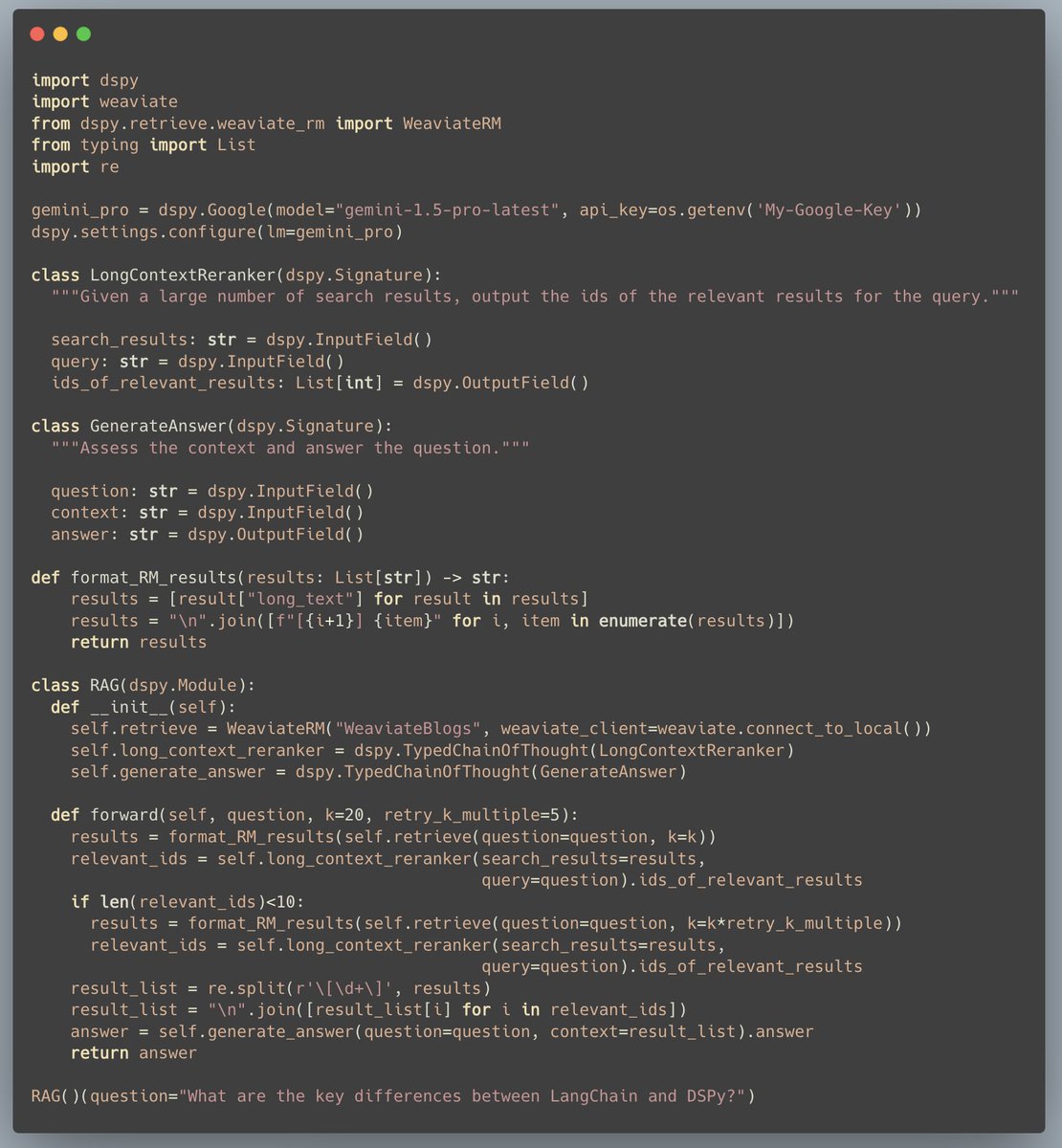

DSPy is a SUPER exciting advancement for AI and building applications with LLMs!🧩🤯 Pioneered by frameworks such as LangChain and LlamaIndex, we can build much more powerful systems by chaining together LLM calls! This means that the output of one call to an LLM is the input to the next, and so on. We can think of chains as programs, with each LLM call analogous to a function that takes text as input and produces text as output. DSPy offers a new programming model, inspired by PyTorch, that gives you a massive amount of control over these LLM programs. Further the Signature abstraction wraps prompts and structured input / outputs to clean up LLM program codebases. 🧼 DSPy then pairs the syntax with a super novel *compiler* that jointly optimizes the instructions for each component of an LLM program, as well as sourcing examples of the task. ⚙️ Here is my review of the ideas in DSPy, covering the core concepts and walking through the introduction notebooks showing how to compile a simple retrieve-then-read RAG program, as well as a more advanced Multi-Hop RAG program where you have 2 LLM components to be optimized with the DSPy compiler! I hope you find it useful! 🎙️ youtube.com/watch?v=41EfOY…

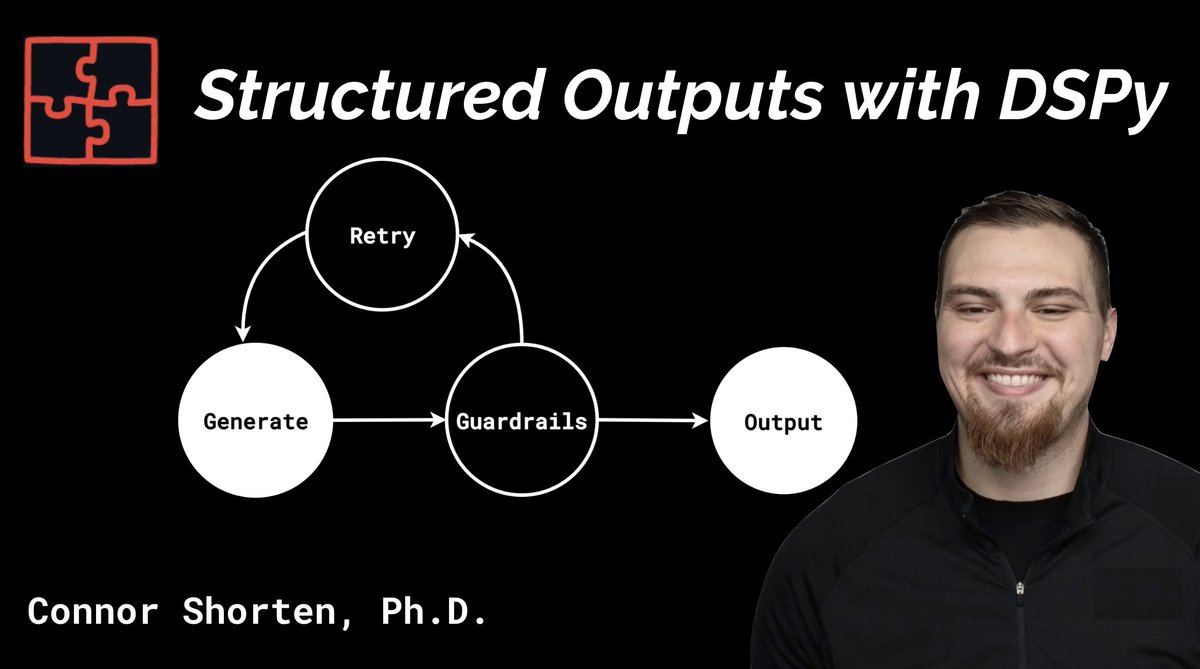

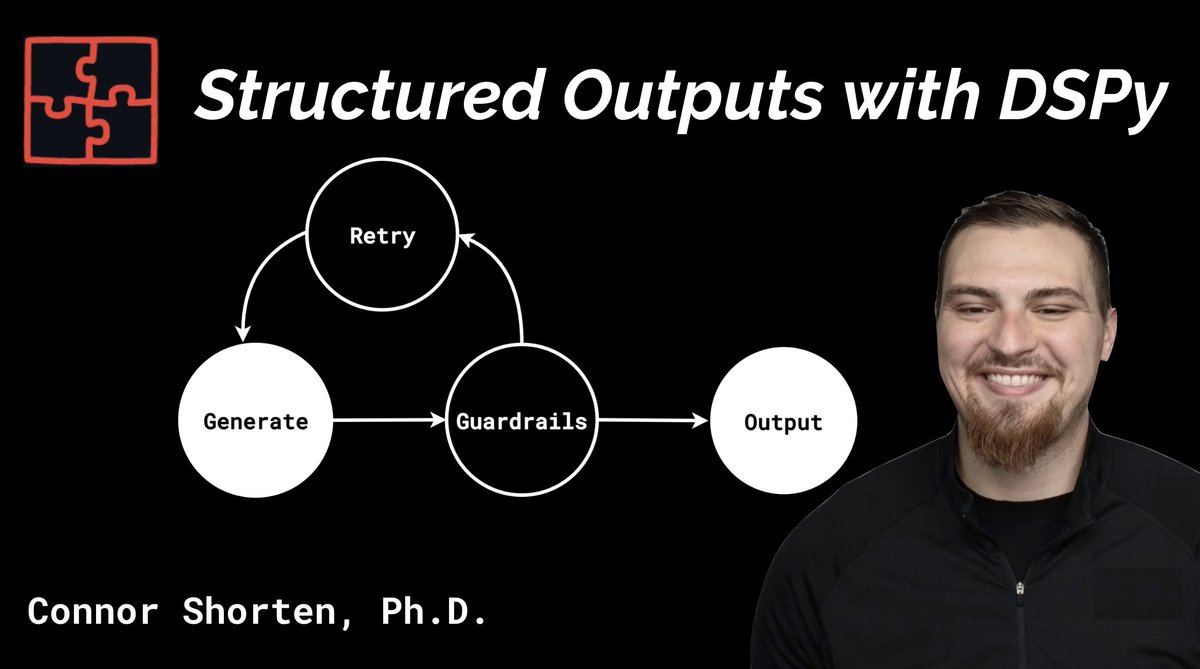

Unfortunately, Large Language Models will not consistently follow the instructions that you give them. This is a massive problem when you are building AI systems that require a particular type of output from the previous step to feed into the next one! For example, imagine you are building a blog post writing system that first takes a question and retrieved context to output a list of topics. These topics have to be formatted in a particular way, such as a comma-separated list or a JSON of Topic objects, such that the system can continue writing the blog post! 🤝 I am SUPER excited to share the 4th video in my DSPy series, diving into 3 solutions to structuring outputs in DSPy programs: (1) TypedPredictors, (2) DSPy Assertions, and (3) Custom Guardrails with the DSPy programming model! 📖 TypedPredictors follow the line of thinking around JSON mode and using Pydantic BaseModels to interface types and custom objects into a JSON template for LLMs. The output can then be validated to provide a more structured retry prompt to correct the output structure! 🛠️ DSPy Assertions are one of the core building blocks of DSPy, offering an interface to input a boolean-valued function and a retry prompt which is templated alongside the past output to retry the call to the LLM! ⚠️♻️ Custom Guardrails with the DSPy Programming Model are one of the things I love the most about DSPy — we have unlimited flexibility to control these systems however we want. The video will also show you how to write custom guardrails and retry Signatures and discussion around using TypedPredictors for your Custom Guardrails and potentially feeding your Custom Guardrails into a DSPy Assertion. 💥 I had so much fun exploring this topic! Further seeing how well OpenAI’s GPT-4 and GPT-3.5-Turbo, Cohere’s Command R, and Mistral 7B hosted with Ollama perform with each Structured Output strategy! I also found monitoring structured output retries to be another fantastic application of Arize Phoenix! I hope you find the video useful! 🎙️ youtube.com/watch?v=tVw3Cw…

Open-sourcing retrieve-dspy! 💻🚀 While developing Search Mode for Weaviate's Query Agent, we dove into the literature. It was amazing, and overwhelming, to see how many different takes on Compound Retrieval Systems there are! 📚 From perspectives on Reranking, such as to reason, or not to reason with Cross Encoders, Sliding Window Listwise Rankers, Top-Down Partitioning, Pairwise Ranking Policies, .... to Query Expansion, such as HyDE, LameR, ThinkQE, ..., Query Decomposition, Multi-Hop Retrieval, Adaptive Retrieval, and more... There are endless design decisions for building Compound Retrieval Systems!! 🛠️ Inspired by the work on LangProbe from @ShangyinT et al., retrieve-dspy is a collection of DSPy programs from the IR literature. I hope this work will help us better compare these systems such as HyDE vs. ThinkQE, or Cross Encoders vs. Sliding Window Listwise Reranking, and understand the impact of DSPy's optimizers! 🧩🔥 The first step of many, repo linked below!

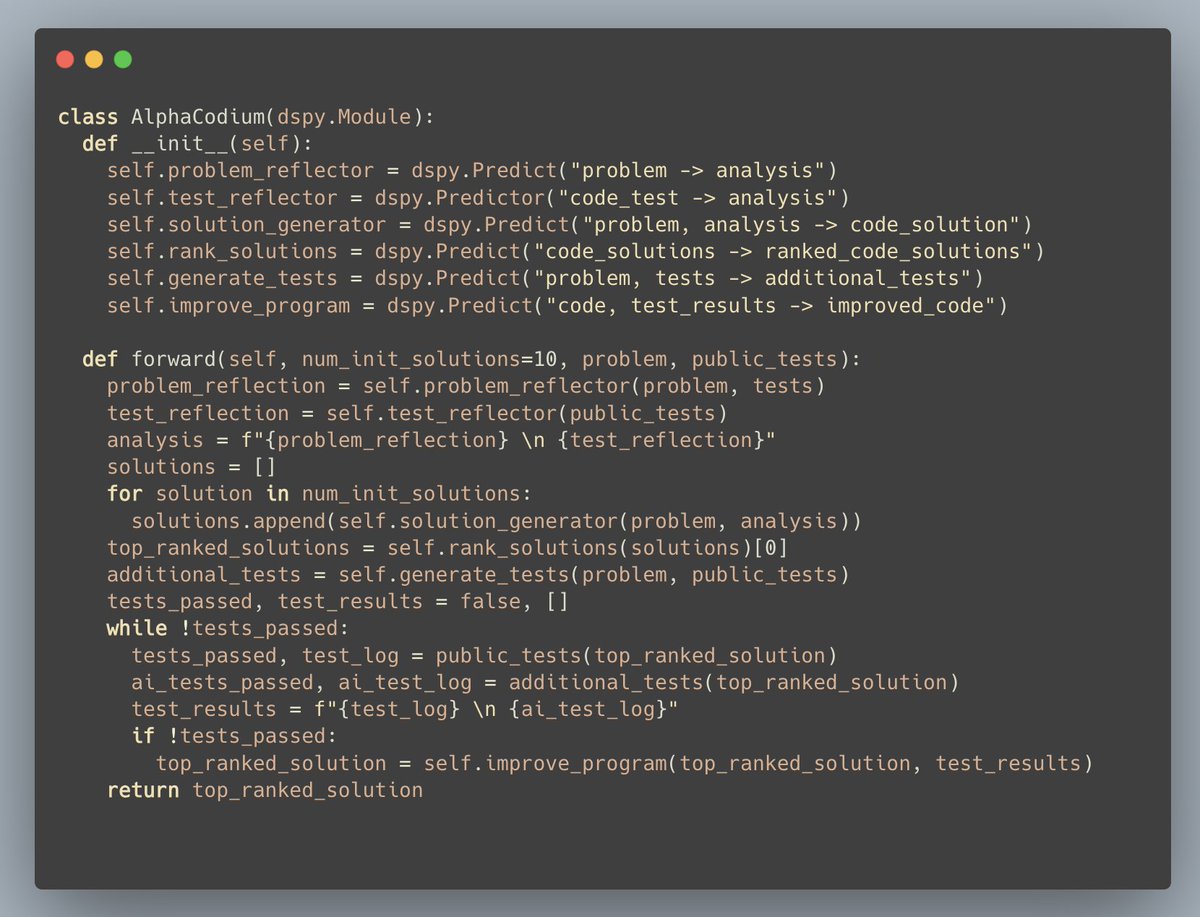

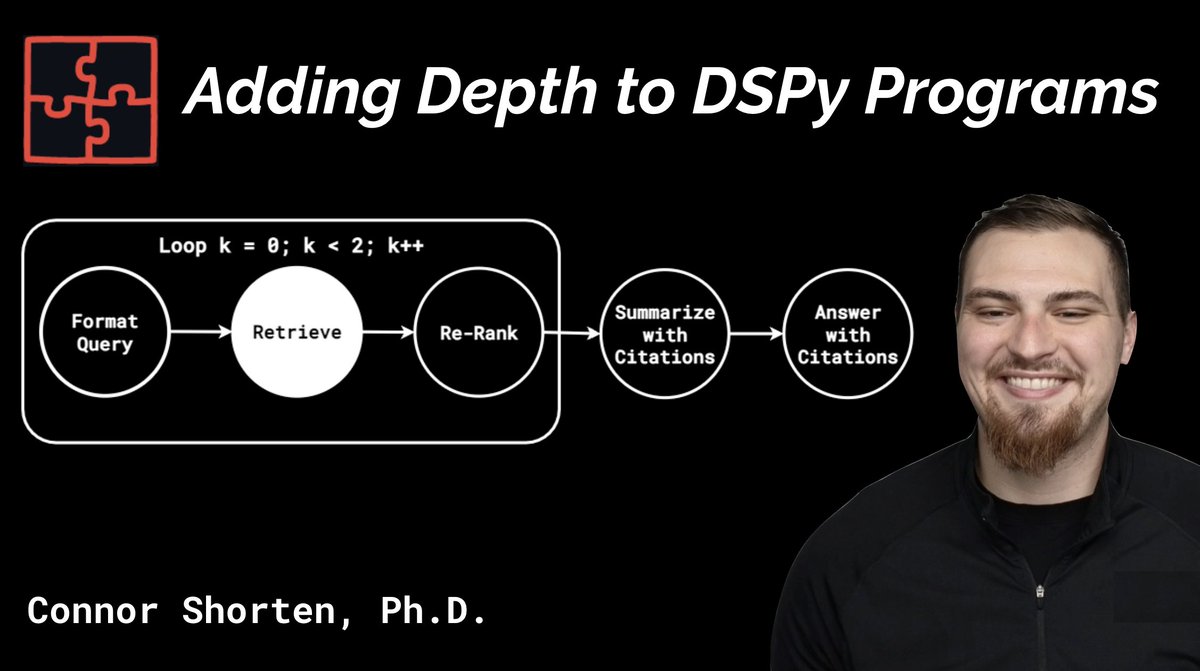

Adding Depth to DSPy Programs!! I am SUPER excited to share a new video exploring what it means to add depth to DSPy programs! 🚀 One of the headlines of DSPy is the comparison with PyTorch, whereas layers == sub-tasks as we decompose complex tasks like writing blog posts or question answering into their components. 🧱 This has all sorts of interesting aspects to it, this video begins by explaining how each component has an optimal task description, as well as unique input-output examples. We then explore how the BootstrapFewShot compiler not only sources examples for each of these sub-tasks, but also Chain-of-Thought reasonings for them! We then dive further into `BootstrapFewShot`, taking a look at the code to understand exactly how DSPy orchestrates using a teacher LM to trace through the program and produce these examples! 🧐 Another super powerful aspect of DSPy is the ability to compose Multi-Model systems, such as using Cohere's Command Nightly for the Query Wiriting, Mistral 7B for Summarization, and GPT-3.5-Turbo for Question Answering! 🔌 I hope you enjoy the video! As always the code examples can be found on GitHub at Weaviate/Recipes! 🧑🍳 YouTube: youtu.be/0c7Ksd6BG88

Most engaged tweets of Connor Shorten

DSPy is a SUPER exciting advancement for AI and building applications with LLMs!🧩🤯 Pioneered by frameworks such as LangChain and LlamaIndex, we can build much more powerful systems by chaining together LLM calls! This means that the output of one call to an LLM is the input to the next, and so on. We can think of chains as programs, with each LLM call analogous to a function that takes text as input and produces text as output. DSPy offers a new programming model, inspired by PyTorch, that gives you a massive amount of control over these LLM programs. Further the Signature abstraction wraps prompts and structured input / outputs to clean up LLM program codebases. 🧼 DSPy then pairs the syntax with a super novel *compiler* that jointly optimizes the instructions for each component of an LLM program, as well as sourcing examples of the task. ⚙️ Here is my review of the ideas in DSPy, covering the core concepts and walking through the introduction notebooks showing how to compile a simple retrieve-then-read RAG program, as well as a more advanced Multi-Hop RAG program where you have 2 LLM components to be optimized with the DSPy compiler! I hope you find it useful! 🎙️ youtube.com/watch?v=41EfOY…

Unfortunately, Large Language Models will not consistently follow the instructions that you give them. This is a massive problem when you are building AI systems that require a particular type of output from the previous step to feed into the next one! For example, imagine you are building a blog post writing system that first takes a question and retrieved context to output a list of topics. These topics have to be formatted in a particular way, such as a comma-separated list or a JSON of Topic objects, such that the system can continue writing the blog post! 🤝 I am SUPER excited to share the 4th video in my DSPy series, diving into 3 solutions to structuring outputs in DSPy programs: (1) TypedPredictors, (2) DSPy Assertions, and (3) Custom Guardrails with the DSPy programming model! 📖 TypedPredictors follow the line of thinking around JSON mode and using Pydantic BaseModels to interface types and custom objects into a JSON template for LLMs. The output can then be validated to provide a more structured retry prompt to correct the output structure! 🛠️ DSPy Assertions are one of the core building blocks of DSPy, offering an interface to input a boolean-valued function and a retry prompt which is templated alongside the past output to retry the call to the LLM! ⚠️♻️ Custom Guardrails with the DSPy Programming Model are one of the things I love the most about DSPy — we have unlimited flexibility to control these systems however we want. The video will also show you how to write custom guardrails and retry Signatures and discussion around using TypedPredictors for your Custom Guardrails and potentially feeding your Custom Guardrails into a DSPy Assertion. 💥 I had so much fun exploring this topic! Further seeing how well OpenAI’s GPT-4 and GPT-3.5-Turbo, Cohere’s Command R, and Mistral 7B hosted with Ollama perform with each Structured Output strategy! I also found monitoring structured output retries to be another fantastic application of Arize Phoenix! I hope you find the video useful! 🎙️ youtube.com/watch?v=tVw3Cw…

I am BEYOND EXCITED to publish our interview with Krista Opsahl-Ong (@kristahopsalong) from @StanfordAILab! 🔥 Krista is the lead author of MIPRO, short for Multi-prompt Instruction Proposal Optimizer, and one of the leading developers and scientists behind DSPy! This was such a fun discussion beginning with the motivation of Automated Prompt Engineering, Multi-Layer Language Programs, and their intersection. We then dove into the details of how MIPRO achieves this and miscellaneous topics in AI from Self-Improving AI Systems to LLMs and Tool Use, DSPy for Code Generation, and more! I really hope you enjoy the podcast! As always, more than happy to answer any questions or discuss any ideas about the content in the podcast!

Open-sourcing retrieve-dspy! 💻🚀 While developing Search Mode for Weaviate's Query Agent, we dove into the literature. It was amazing, and overwhelming, to see how many different takes on Compound Retrieval Systems there are! 📚 From perspectives on Reranking, such as to reason, or not to reason with Cross Encoders, Sliding Window Listwise Rankers, Top-Down Partitioning, Pairwise Ranking Policies, .... to Query Expansion, such as HyDE, LameR, ThinkQE, ..., Query Decomposition, Multi-Hop Retrieval, Adaptive Retrieval, and more... There are endless design decisions for building Compound Retrieval Systems!! 🛠️ Inspired by the work on LangProbe from @ShangyinT et al., retrieve-dspy is a collection of DSPy programs from the IR literature. I hope this work will help us better compare these systems such as HyDE vs. ThinkQE, or Cross Encoders vs. Sliding Window Listwise Reranking, and understand the impact of DSPy's optimizers! 🧩🔥 The first step of many, repo linked below!

The DSPy community is growing in Boston! ☘️🔥 We are beyond excited to be hosting a DSPy meetup on October 15th! Come meet DSPy and AI builders and learn from talks by Omar Khattab (@lateinteraction), Noah Ziems (@NoahZiems), and Vikram Shenoy (@vikramshenoy97)! See you in Boston, it will be an epic one!! 🎉 Sign up here - luma.com/4xa3nay1?tk=Dv…

I am SUPER EXCITED to publish the 100th episode of the Weaviate Podcast (💯🎙️🎉) with Lucas Negritto (@lucasteez) and Bob van Luijt (@bobvanluijt) on Generative UIs! 🖼️♻️ This is an amazing example of AI-native applications and the new generation of software! Rather than producing front-end code, what if the generative model just dynamically renders the application? This reminded me heavily of the GameGAN research from NVIDIA, super cool to see what Lucas is building with Odapt and how this idea is advancing! I thought the discussion of "Native Multimodality" was really fascinating! 🔊👀 This was such a fun conversation! I hope you find the podcast interesting and useful! YouTube: youtube.com/watch?v=x1i9pG…

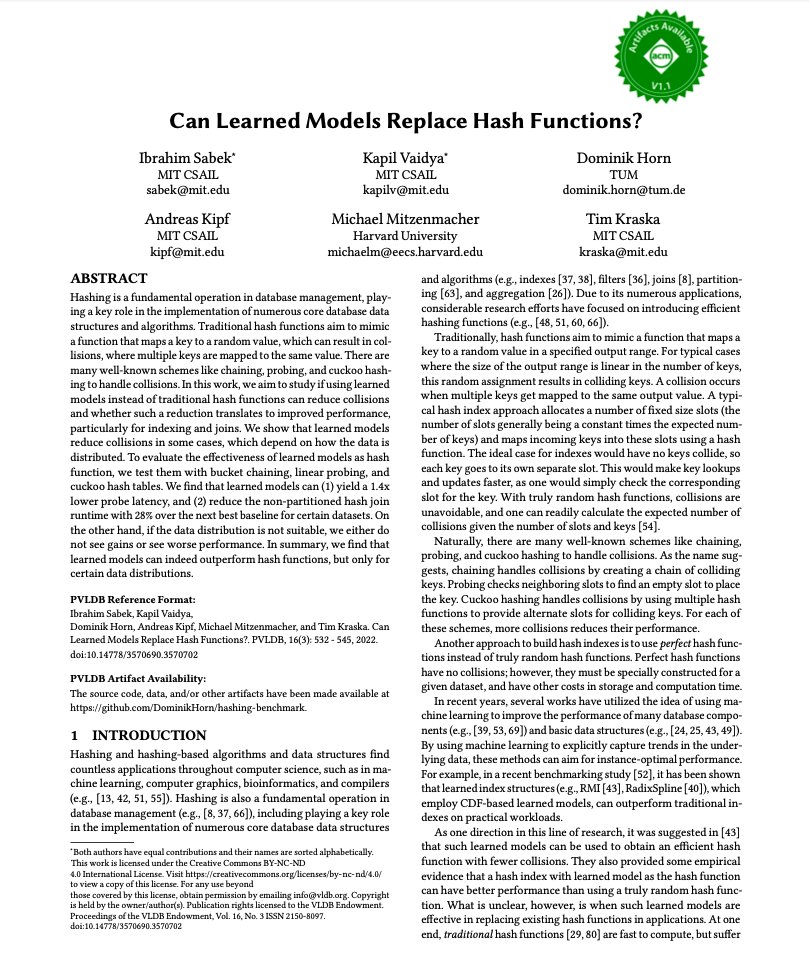

GPT-4o is awesome at parsing PDFs into text! 🤖📜 Here is an example, 👇 Text prompt: "Can you please transcribe the text in this image into a single blob of text?" Image input: (A base64 encoded string of the image attached to this tweet) Results in: ``` Can Learned Models Replace Hash Functions? Ibrahim Sabek MIT CSAIL sabek@mit.edu Kapil Vaidya* MIT CSAIL kapilv@mit.edu Dominik Horn TUM dominik.horn@tum.de Andreas Kipf MIT CSAIL kipf@mit.edu Michael Mitzenmacher Harvard University michaelm@eecs.harvard.edu Tim Kraska MIT CSAIL kraska@mit.edu ABSTRACT Hashing is a fundamental operation in database management, playing a key role in the implementation of numerous core database data structures and algorithms. Traditional hash functions aim to mimic a function that maps a key to a random value, which can result in collisions, where multiple keys are mapped to the same value. There are many well-known schemes like chaining, probing, and cuckoo hashing to handle collisions. In this work, we aim to study if using learned models instead of traditional hash functions can reduce collisions and whether such a reduction translates to improved performance, particularly for indexing and joins. We show that learned models reduce collisions in some cases, which depend on how the data is distributed. To evaluate the effectiveness of learned models as hash function, we test them with bucket chaining, linear probing, and cuckoo hash tables. We find that learned models can (1) yield a 1.4x lower probe latency, and (2) reduce the non-partitioned hash join runtime with 25% over the next best baseline for certain datasets. On the other hand, if the data distribution is not suitable, we either do not see gains or see worse performance. In summary, we find that learned models can indeed outperform hash functions, but only for certain data distributions. PVLDB Reference Format: Ibrahim Sabek, Kapil Vaidya, Dominik Horn, Andreas Kipf, Michael Mitzenmacher, and Tim Kraska. Can Learned Models Replace Hash Functions?. PVLDB, 16(3): 532 - 545, 2022. doi:10.14778/3570690.3570702 PVLDB Artifact Availability: The source code, data, and/or other artifacts have been made available at github.com/DominikHorn/ha…. 1 INTRODUCTION Hashing and hash-based algorithms and data structures find countless applications throughout computer science, such as in machine learning, computer graphics, bioinformatics, and compilers (e.g., [3, 12, 31, 55]). Hashing is also a fundamental operation in database management (e.g., [8, 37, 60]), including playing a key role in the implementation of numerous core database data structures and algorithms (e.g., indexes [37, 38], filters [36], joins [8], partitioning [63], and aggregation [26]). Due to its numerous applications, considerable research efforts have focused on introducing efficient hashing functions (e.g., [6, 18, 51, 60, 66]). Traditionally, hash functions aim to mimic a function that maps a key to a random value in a specified output range. For typical cases where the size of the output range is linear in the number of keys, this random assignment results in colliding keys. A collision occurs when multiple keys get mapped to the same output value. A typical hash index approach allocates a number of fixed size slots (the number of slots generally being a constant times the expected number of keys) and maps incoming keys into these slots using a hash function. The ideal case for indexes would have no keys collide, so each key goes to its own separate slot. This would make key lookups and updates faster, as one would simply check the corresponding slot for the key. With truly random hash functions, collisions are unavoidable, and one can readily calculate the expected number of collisions given the number of slots and keys [54]. Naturally, there are many well-known schemes like chaining, probing, and cuckoo hashing to handle collisions. As the name suggests, chaining handles collisions by creating a chain of collided keys. Probing checks neighboring slots to find an empty slot to place the key. Cuckoo hashing handles collisions by using multiple hash functions to provide alternate slots for colliding keys. For each of these schemes, more collisions reduce their performance. Another approach to build hash indexes is to use perfect hash functions instead of truly random hash functions. Perfect hash functions have no collisions; however, they must be specially constructed for a given dataset, and have other costs in storage and computation time. In recent years, several works have utilized the idea of using machine learning to improve the performance of many database components (e.g., [39, 55, 69]) and basic data structures (e.g., [24, 25, 43, 49]). By using machine learning to explicitly capture trends in the underlying data, these methods can aim for instance-optimal performance. For example, in a recent benchmarking study [52], it has been shown that learned index structures (e.g.,RMI [43], PGM [15], SlotMachine [40]), which employ CDF-based learned models, can outperform traditional indexes on practical workloads. As one direction in this line of research, it was suggested in [43] that such learned models can be used to obtain an efficient hash function with fewer collisions. They also provide some empirical evidence that a hash index with learned models as the hash function can have better performance than using a truly random hash function. What is unclear, however, is when such learned models are effective in replacing existing hash functions in applications. At one end, traditional hash functions [29, 48] are fast to compute, but suffer ```

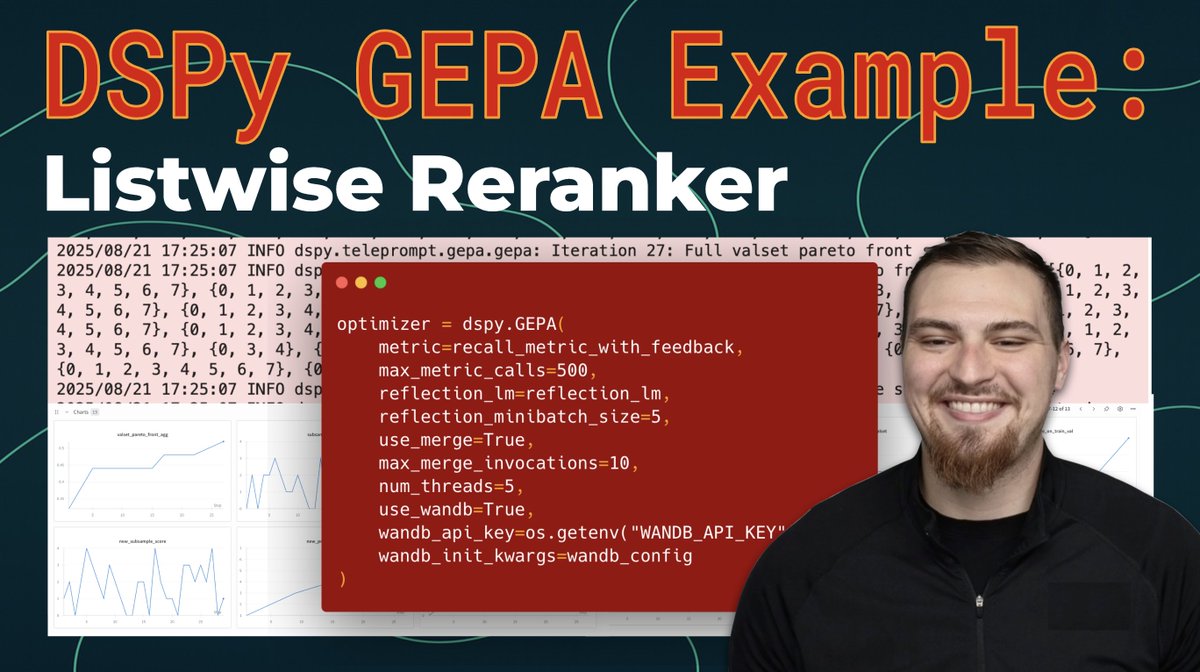

I am SUPER EXCITED to publish the 127th epsiode of the Weaviate Podcast featuring Lakshya A. Agrawal (@LakshyAAAgrawal)! 🎙️🎉 Lakshya is the lead author of GEPA: Reflective Prompt Evolution can Outperform Reinforcement Learning! GEPA is a huge step forward for automated prompt optimization, @DSPyOSS, and the broader scope of integrating LLMs with optimization algorithms! The podcast discusses all sorts of areas of GEPA from the Reflective Prompt Mutation to Pareto-Optimal Candidate Selection, Test-Time Training, the LangProBe Benchmark, and more!! 📜 I had so much fun chatting about these things with Lakshya! I really hope you enjoy the podcast! 🎙️

People with Innovator archetype

Building @everyrank & Brainbox @3pe1x | Engineer @ http://nidana(dot)io | AI, privacy-first

Partner@ MetaLabs.Capital CEO@ 8848.Studio

Engineer | Building Exampredict AI | EP Talk

Since 2018 in Web3

Think smarter, not harder. Meet your brain's new best friend 📒

ceo @afterquery. prev statistics + finance + cs @ wharton / penn, @silverlake_news, @google, @morganstanley, @meta

伱不湜我,所姒伱鈈会眀白伱對莪の薏図 髒愛呮會怺遠茬 芣圵web2還洧web3

AWS-Bedrock | Ex-AWS-SageMaker | Ex-Meta | Working with 🧠🤖💥

Student Chasing a future where 9–5 is Plan B Building automations and sharing experiences

AI engineer at luca-app.de • End-to-end design & development at webvise.io

⬛️ Tech Polymath. ~:Sss python | PowerShell ⬛️ Freedom, AI, Discord, InfoSec, VMs, Infra ⬛️ ❤️ think, pika, replicate, creatr, fal

ME/AE/Dev - Unemployed - Worked on Aurora Semi Truck at Conti - Ex EDU Company Founder - Automater of Homesteads

Explore Related Archetypes

If you enjoy the innovator profiles, you might also like these personality types: